Building Workflows in PHP

Almost every business relies on workflows. Whether you're processing orders, onboarding customers, or handling document approvals, these processes are the beating heart of your application. Yet for most PHP developers, workflows become a source of frustration rather than a competitive advantage. They often turn into the most complex part of the system: flows that are hard to follow, tests that are complex and flaky, and code that requires expert knowledge of the system before anyone can change it safely.

In this article we will look at how to build workflows that are the opposite of all the above: easy to follow and maintain, able to scale to the needs of the most demanding environments, and reliable by nature. But before we jump into that, let's first discuss the traditional approaches to the problem.

Why Traditional Approaches Fail

Many developers try to solve workflows by creating elaborate service layers:

class OrderProcessingService
{
    public function __construct(
        private ValidationService $validator,
        private PaymentService $payment,
        private InventoryService $inventory,
        private ShippingService $shipping,
        private NotificationService $notification,
        private DiscountService $discount,
        private AuditService $audit
    ) {}

    public function process(Order $order): void
    {
        $this->validator->validate($order);
        $this->payment->process($order);
        $this->inventory->reserve($order);
        $this->shipping->schedule($order);
        $this->notification->send($order);
        $this->audit->log($order);
    }
}

This looks clean on the surface, but there is a fundamental problem with this approach: all the steps are mixed together, so we have no way to isolate them. The result is a workflow that is easy to break and hard to recover:

- Error handling becomes cumbersome: what happens when the shipping scheduling step fails after the payment has already been taken, and how do we handle that failure?

- One slow step affects the overall timing: what if payment processing takes longer than usual, or times out completely?

- No failure isolation: what if the Notification Service is down and we can't recover? How do we resume the flow once the service is back?

Code like this will most likely work smoothly in a development environment, yet it can quickly backfire in production, forcing time-consuming recovery from the problems it created. That is why this kind of code is usually just the beginning of the journey, leading teams toward more sophisticated solutions.

The State Machine Complexity Explosion

Desperate to escape service layer chaos, many teams turn to state machines. "Finally," they think, "a structured approach to workflow management."

However, state machines demand extensive upfront configuration, either in PHP or YAML, which can quickly become hard to maintain:

framework:
    workflows:
        order_processing:
            type: 'state_machine'
            audit_trail:
                enabled: true
            marking_store:
                type: 'method'
                property: 'currentState'
            supports:
                - App\Entity\Order
            initial_marking: draft
            places:
                - draft
                - payment_pending
                - payment_failed
                - payment_completed
                - inventory_reserved
                - inventory_failed
                - shipping_scheduled
                - shipped
                - completed
                - cancelled
                - refunded
            transitions:
                # A state machine transition has exactly one target place,
                # so every outcome needs its own transition
                start_payment:
                    from: draft
                    to: payment_pending
                process_payment:
                    from: payment_pending
                    to: payment_completed
                fail_payment:
                    from: payment_pending
                    to: payment_failed
                retry_payment:
                    from: payment_failed
                    to: payment_pending
                reserve_inventory:
                    from: payment_completed
                    to: inventory_reserved
                fail_inventory_reservation:
                    from: payment_completed
                    to: inventory_failed
                # ... 15 more transitions for a "simple" order process

Where is the behaviour?

State machines solve entity state management, but workflows are about behaviour, not state.

// State machines ask: "What state is this entity in?"
$currentState = $workflow->getMarking($order)->getPlaces();

// But the question that actually gives us knowledge is: "What happens when we process an order?"

// State machines focus on STATES and TRANSITIONS
$workflow->apply($order, 'process_payment');
$workflow->apply($order, 'reserve_inventory');

// Business workflows and processes focus on STEPS and OUTCOMES:
// "First validate, then process payment, then reserve inventory"
// This intent is lost when we treat a state machine and a workflow as the same thing

With business workflows we focus on behaviour, yet with state machines we actually focus (as the name implies) on state, that is, on data. Instead of a workflow step expressing behaviour, it expresses a state change.

This means the very thing we build the workflow for, the behaviour, gets pushed to the edges and coupled to framework transition events:

class OrderTransitionHandler
{
    #[AsEventListener(event: 'workflow.order_processing.transition.process_payment')]
    public function onProcessPayment(TransitionEvent $event): void
    {
        // The actual reason we build the workflow, the "behaviour", is hidden in transition event handlers.
        // "State" is pushed to the front and "behaviour" to the back,
        // and we are bound to framework events in order to trigger the behaviour.
        $order = $event->getSubject();

        if ($order->getCustomer()->isPremium()) {
            $this->applyPremiumDiscount($order);
            $this->schedulePriorityProcessing($order);
        }

        try {
            $this->paymentService->process($order);
        } catch (PaymentException $e) {
            // Error handling turns into complex state management
            $event->getWorkflow()->apply($order, 'fail_payment');
            throw $e;
        }
    }

    #[AsEventListener(event: 'workflow.order_processing.transition.reserve_inventory')]
    public function onReserveInventory(TransitionEvent $event): void
    {
        // More business logic hidden in event handlers
    }
}

State machines focus on state, not behaviour. They can serve as a supporting tool for visibility, but they are not good candidates for workflow orchestration.

Enter Ecotone's Orchestrator

Ecotone provides an Enterprise feature called Orchestrator, which promotes building workflows in a visible and maintainable way.

class OrderOrchestrator
{
    #[Orchestrator(inputChannelName: "process.order")]
    public function processOrder(Order $order): array
    {
        return [
            "validate.order",
            "process.payment",
            "reserve.inventory",
            "send.confirmation",
            "audit.transaction"
        ];
    }
}

With Orchestrator, the business process became the code itself. No hidden logic, no scattered implementations, no complex state management - just pure, explicit business intent.

Orchestrator defines the workflow in a clear and understandable way, separately from the actual step implementations, which makes modifications and changes easy.

Implementing Steps: Clean, Focused, and Testable

Each workflow step becomes a focused, independently testable unit:

class OrderProcessingSteps
{
    #[InternalHandler(inputChannelName: "validate.order")]
    public function validateOrder(Order $order): Order
    {
        if (!$order->hasItems()) {
            throw new InvalidOrderException('Order must contain items');
        }

        if (!$order->hasValidPaymentMethod()) {
            throw new InvalidOrderException('Valid payment method required');
        }

        return $order;
    }

    #[InternalHandler(inputChannelName: "process.payment")]
    public function processPayment(Order $order, PaymentService $paymentService): Order
    {
        $result = $paymentService->charge(
            $order->getTotal(),
            $order->getPaymentMethod()
        );

        return $order->markAsPaid($result->getTransactionId());
    }

    #[InternalHandler(inputChannelName: "audit.transaction")]
    public function auditTransaction(Order $order, AuditService $auditService): Order
    {
        $auditService->logOrderProcessing($order, [
            'customer_type' => $order->getCustomer()->getType(),
            'total_amount' => $order->getTotal(),
            'processing_time' => microtime(true) - $order->getProcessingStartTime()
        ]);

        return $order;
    }
}
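Because a step is just a method that receives a payload and returns one, it can be unit-tested by calling it directly. Below is a minimal, framework-free sketch in plain PHP 8.1: Order, InvalidOrderException and OrderValidationStep are simplified hypothetical stand-ins for the article's types, and the #[InternalHandler] attribute is left out on the assumption that it only wires the method to its channel.

```php
<?php
// Hypothetical, simplified stand-ins for the article's domain types.
final class InvalidOrderException extends RuntimeException {}

final class Order
{
    /** @param list<string> $items */
    public function __construct(
        public readonly array $items,
        public readonly ?string $paymentMethod,
    ) {}

    public function hasItems(): bool
    {
        return $this->items !== [];
    }

    public function hasValidPaymentMethod(): bool
    {
        return $this->paymentMethod !== null && $this->paymentMethod !== '';
    }
}

// Same validation logic as the step above, without the Ecotone attribute.
final class OrderValidationStep
{
    public function validateOrder(Order $order): Order
    {
        if (!$order->hasItems()) {
            throw new InvalidOrderException('Order must contain items');
        }
        if (!$order->hasValidPaymentMethod()) {
            throw new InvalidOrderException('Valid payment method required');
        }

        return $order;
    }
}

// Direct invocation: no framework boot, no workflow, no database.
$step = new OrderValidationStep();
$valid = $step->validateOrder(new Order(['book'], 'card'));

try {
    $step->validateOrder(new Order([], 'card'));
    $rejected = false;
} catch (InvalidOrderException $e) {
    $rejected = $e->getMessage() === 'Order must contain items';
}
```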

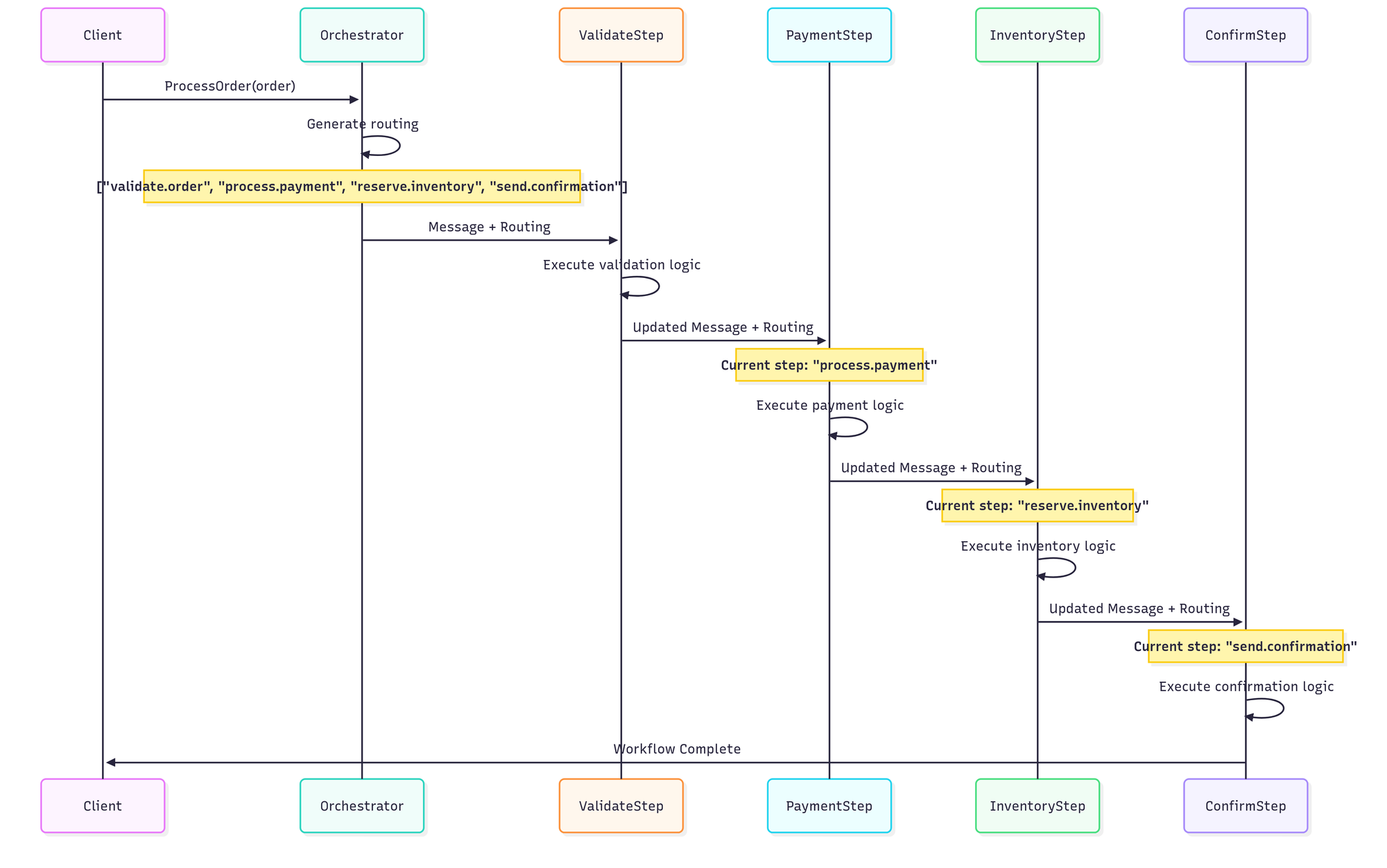

Understanding how Orchestrator achieves this simplicity requires looking at its architecture. Unlike traditional workflow engines that maintain complex state machines or persistent workflow instances, Orchestrator uses a stateless routing slip pattern that eliminates the need for state management entirely.

The Routing Pattern: Carrying Intent in Messages

At its core, Orchestrator works by embedding the workflow steps directly into the message headers. This approach, known as the Routing Slip pattern, turns each message into a self-contained execution plan: the routing information travels with the message and is used to determine the next step.
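To illustrate the idea (not Ecotone's internals), here is a framework-free PHP 8.1 sketch of a routing slip. The Message class, the routingSlip header name and processNextStep() are hypothetical: each call runs one step, and the returned message carries the result plus the steps still left, so nothing outside the message needs to remember where the workflow is.

```php
<?php
// Framework-free illustration of the Routing Slip pattern.
// Message, the 'routingSlip' header and processNextStep() are hypothetical names.

final class Message
{
    /** @param array<string, mixed> $headers */
    public function __construct(
        public readonly mixed $payload,
        public readonly array $headers = [],
    ) {}

    /** @return list<string> the steps that still have to run */
    public function routingSlip(): array
    {
        return $this->headers['routingSlip'] ?? [];
    }
}

/**
 * Runs exactly one hop: takes the first step from the slip, executes it and
 * returns a new message carrying the result and the remaining steps.
 *
 * @param array<string, callable(mixed): mixed> $handlers
 */
function processNextStep(Message $message, array $handlers): Message
{
    $slip = $message->routingSlip();
    $step = array_shift($slip);

    if ($step === null) {
        return $message; // Slip exhausted: the workflow is complete.
    }

    $result = $handlers[$step]($message->payload);

    // Replace the slip header, keep every other header as-is.
    return new Message($result, ['routingSlip' => $slip] + $message->headers);
}

$handlers = [
    'validate.order'  => fn (array $order): array => $order + ['validated' => true],
    'process.payment' => fn (array $order): array => $order + ['paid' => true],
];

$message = new Message(['id' => 42], ['routingSlip' => ['validate.order', 'process.payment']]);

while ($message->routingSlip() !== []) {
    $message = processNextStep($message, $handlers);
}
```

The loop is only for the demonstration; in a message-driven system each hop could run in a different process, since everything it needs is in the message.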

Benefits of Stateless Routing Approach

Why Stateless Changes Everything: The Database-Free Approach

Traditional workflow engines create a maintenance nightmare by storing workflow state in databases. Every running workflow becomes a database record that must be managed, migrated, and eventually cleaned up.

Here's what traditional workflow engines force you to manage:

-- Workflow instances table grows endlessly
CREATE TABLE workflow_instances (
    id BIGINT PRIMARY KEY,
    workflow_type VARCHAR(100),
    current_state VARCHAR(50),
    created_at TIMESTAMP,
    updated_at TIMESTAMP,
    execution_context JSON, -- Can become massive
    step_history JSON,      -- Grows with each step
    status VARCHAR(20)
);

-- Result: Millions of records for busy applications
-- Complex queries to find stuck workflows
-- Expensive cleanup procedures
-- Database migrations when workflow logic changes

The hidden costs add up quickly:

- Database Growth: Workflow tables can grow indefinitely

- Cleanup Complexity: Determining which workflows can be safely deleted becomes a complex operation

- Query Performance: Finding and managing workflow instances requires expensive database queries

Orchestrator eliminates this complexity. Each message carries its own routing information, which is used to determine the next step, so there is no workflow state to store and manage. Routing the workflow itself involves no database storage (asynchronous steps still travel through whatever message channel you configure, such as a broker queue).

Scaling Without the Complexity

Traditional workflow engines make scaling harder, because workflow state must be shared across servers. This requires coordination, extra database operations, and data synchronization, all of which limit the ability to scale.

With Ecotone's Orchestrator, each step can be processed without any workflow-related synchronization or database queries, as the next steps are embedded in the message itself.
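A small sketch of why this matters for scaling, in plain PHP 8.1 with hypothetical names (handleOneHop() and the trace header are illustrative only). The message is serialized to JSON between hops, as it would be on a broker, and each hop is handled by a different "worker" that shares nothing with the others except the message itself:

```php
<?php
// Hypothetical sketch: each "worker" is a function of the incoming JSON message only.
// No workflow table or shared memory is consulted, so any worker can take any hop.

/** @param array<string, callable(array): array> $handlers */
function handleOneHop(string $json, string $workerName, array $handlers): string
{
    $message = json_decode($json, true, 512, JSON_THROW_ON_ERROR);
    $slip = $message['headers']['routingSlip'];
    $step = array_shift($slip);

    if ($step === null) {
        return $json; // Nothing left to run.
    }

    $message['payload'] = $handlers[$step]($message['payload']);
    $message['headers']['routingSlip'] = $slip;
    // Record which worker ran which step, purely for demonstration.
    $message['headers']['trace'][] = "$workerName:$step";

    return json_encode($message, JSON_THROW_ON_ERROR);
}

$handlers = [
    'validate.order'    => fn (array $o): array => [...$o, 'validated' => true],
    'process.payment'   => fn (array $o): array => [...$o, 'paid' => true],
    'reserve.inventory' => fn (array $o): array => [...$o, 'reserved' => true],
];

$json = json_encode([
    'payload' => ['orderId' => 'A-1'],
    'headers' => ['routingSlip' => ['validate.order', 'process.payment', 'reserve.inventory']],
], JSON_THROW_ON_ERROR);

// Three hops, three independent workers.
foreach (['worker-1', 'worker-2', 'worker-3'] as $worker) {
    $json = handleOneHop($json, $worker, $handlers);
}

$final = json_decode($json, true, 512, JSON_THROW_ON_ERROR);
```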

The scaling benefits are clear:

- Horizontal Scaling: New servers can process workflows in isolation, as there is no shared workflow state

- Processing Isolation: Step execution is completely independent, with no inter-server communication, synchronization, or database locking needed

- Greater Performance: We avoid fetching and storing the workflow state at each step

This stateless architecture is what makes Orchestrator so powerful - it eliminates the complexity that workflow engines introduced while providing flexibility and performance.

Zero-Migration Workflow Changes

Because the routing is stateless, workflow changes take effect immediately, and there is no need to migrate workflow instances or keep compatibility between versions. In-flight workflows finish their execution based on the steps defined when they started, while new ones follow the changed definition. This isolation removes the usual challenges of deploying workflow changes and makes it easy to A/B test different flows.

// Version 1: Original workflow
#[Orchestrator(inputChannelName: "process.order")]
public function processOrderV1(Order $order): array
{
    return [
        "validate.order",
        "process.payment",
        "send.confirmation"
    ];
}

// Deployed Version 2: Enhanced workflow (replaces V1) - NO MIGRATION REQUIRED
#[Orchestrator(inputChannelName: "process.order")]
public function processOrderV2(Order $order): array
{
    $workflow = ["validate.order", "process.payment"];

    // New business logic - works immediately
    if ($order->getCustomer()->isPremium()) {
        $workflow[] = "apply.premium.benefits";
    }

    $workflow[] = "send.confirmation";

    return $workflow;
}

// All new orders use V2 immediately
// No existing workflow instances to migrate
// No complex deployment procedures
// No downtime required
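Why in-flight workflows are unaffected can be shown with plain arrays. In this hypothetical sketch, orchestratorV1() and orchestratorV2() mimic the two definitions above; the definition is called only when a workflow starts, so a message that already holds a V1 slip keeps it after V2 is deployed:

```php
<?php
// Hypothetical sketch: the orchestrator definition is consulted only when a
// workflow starts. After that, the steps travel inside the message.

function orchestratorV1(array $order): array
{
    return ['validate.order', 'process.payment', 'send.confirmation'];
}

function orchestratorV2(array $order): array
{
    $workflow = ['validate.order', 'process.payment'];
    if ($order['premium']) {
        $workflow[] = 'apply.premium.benefits';
    }
    $workflow[] = 'send.confirmation';

    return $workflow;
}

// An order started before the deployment: its slip was computed by V1,
// and it has already completed its first step.
$inFlight = ['payload' => ['premium' => true], 'routingSlip' => orchestratorV1(['premium' => true])];
array_shift($inFlight['routingSlip']);

// "Deploy" V2: only workflows started from now on call the new definition.
$newOrder = ['payload' => ['premium' => true], 'routingSlip' => orchestratorV2(['premium' => true])];
```

The in-flight message finishes with the V1 steps it carries, while the new one gets the premium step, without any migration of stored state.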

Features

With stateless routing covered as the core of Orchestrator, we can now look at the features it provides.

Data Passing and Enriching: Flexible Context Management

One of Orchestrator's most powerful features is how it handles data flow between workflow steps. You have complete flexibility in how data moves through your workflow - either by enriching the main payload or by adding metadata without touching the original data.

Approach 1: Enriching the Main Payload

The most straightforward approach is to modify and enrich the main business object as it flows through the workflow:

class OrderProcessingSteps
{
    #[InternalHandler(inputChannelName: "validate.order")]
    public function validateOrder(Order $order): Order
    {
        if (!$order->hasItems()) {
            throw new InvalidOrderException('Order must contain items');
        }

        // Return enriched order with validation timestamp
        return $order->markAsValidated(new DateTime());
    }

    #[InternalHandler(inputChannelName: "process.payment")]
    public function processPayment(Order $order, PaymentService $paymentService): Order
    {
        $result = $paymentService->charge(
            $order->getTotal(),
            $order->getPaymentMethod()
        );

        // Enrich order with payment details
        return $order
            ->markAsPaid($result->getTransactionId())
            ->addPaymentTimestamp($result->getProcessedAt())
            ->setPaymentReference($result->getReference());
    }

    #[InternalHandler(inputChannelName: "apply.premium.benefits")]
    public function applyPremiumBenefits(Order $order): Order
    {
        // Directly modify the order object
        return $order
            ->addDiscount(0.15)
            ->addFreeShipping()
            ->addPrioritySupport()
            ->addExtendedWarranty();
    }
}

When to use payload enrichment:

- The additional data becomes part of the business object's state

- Subsequent steps need the enriched data as part of the main object

- You want a single, comprehensive result object
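One detail worth making explicit: if your business object is immutable, each step must return the new instance, and the next step receives that return value. A minimal sketch with a hypothetical immutable Order (PHP 8.1 readonly properties), chaining steps the way a synchronous workflow passes results along:

```php
<?php
// Hypothetical immutable Order: every enrichment returns a new instance.
final class Order
{
    public function __construct(
        public readonly float $total,
        public readonly ?string $transactionId = null,
        public readonly float $discount = 0.0,
    ) {}

    public function addDiscount(float $discount): self
    {
        return new self($this->total, $this->transactionId, $discount);
    }

    public function markAsPaid(string $transactionId): self
    {
        return new self($this->total, $transactionId, $this->discount);
    }
}

// Each step receives the previous step's return value.
$steps = [
    fn (Order $o): Order => $o->addDiscount(0.15),
    fn (Order $o): Order => $o->markAsPaid('tx-123'),
];

$original = new Order(100.0);
$enriched = array_reduce($steps, fn (Order $carry, callable $step): Order => $step($carry), $original);
```

The original instance is untouched, which is also why tests should assert on the workflow's result rather than on the object they sent in.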

Approach 2: Adding Metadata Without Changing Original Payload

Sometimes you need additional context for processing without modifying the original business object. Use changingHeaders: true to add metadata:

class OrderEnrichmentSteps
{
    public function __construct(
        private RiskService $riskService,
        private OrderService $orderService,
        private CreditService $creditService
    ) {}

    #[InternalHandler(
        inputChannelName: "enrich.customer.context",
        changingHeaders: true
    )]
    public function enrichCustomerContext(Order $order): array
    {
        $customer = $order->getCustomer();

        // Return metadata that becomes message headers
        return [
            'customerTier' => $customer->getTier(),
            'loyaltyPoints' => $customer->getLoyaltyPoints(),
            'riskScore' => $this->riskService->calculateScore($customer),
            'purchaseHistory' => $this->orderService->getCustomerHistory($customer->getId()),
            'creditLimit' => $this->creditService->getLimit($customer->getId())
        ];
    }

    #[InternalHandler(inputChannelName: "calculate.pricing")]
    public function calculatePricing(
        Order $order, // Original order unchanged
        #[Header('customerTier')] string $tier,
        #[Header('loyaltyPoints')] int $points,
        #[Header('riskScore')] int $riskScore
    ): Order {
        // Use metadata for business logic without polluting the order object
        $discount = 0;

        if ($tier === 'PREMIUM' && $points > 1000) {
            $discount = 0.15; // 15% premium discount
        } elseif ($tier === 'GOLD' && $points > 500) {
            $discount = 0.10; // 10% gold discount
        }

        // Apply risk-based adjustments
        if ($riskScore > 80) {
            $discount = max(0, $discount - 0.05); // Reduce discount for high-risk customers
        }

        return $order->applyDiscount($discount);
    }

    #[InternalHandler(inputChannelName: "finalize.order")]
    public function finalizeOrder(
        Order $order,
        #[Header('processedAt')] DateTime $processedAt,
        #[Header('executionId')] string $executionId,
        PaymentService $paymentService
    ): Order {
        $paymentService->makePayment($order);

        return $order->markAsCompleted();
    }
}

When to use metadata enrichment:

- Additional data is needed for processing logic but shouldn't be part of the business object

- You want to keep the original payload clean and focused

- Multiple steps need different contextual information

- Audit trails, processing metadata, or temporary calculations
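Conceptually, a header-changing step can be modelled as below. This framework-free PHP 8.1 sketch reflects our reading of changingHeaders: true (the returned array is merged into the headers while the payload passes through unchanged); runHeaderChangingStep() and calculateDiscount() are hypothetical names, the latter reproducing the discount rules from calculatePricing above:

```php
<?php
/**
 * Hypothetical model of a header-changing step (not Ecotone's code).
 *
 * @param array{payload: mixed, headers: array<string, mixed>} $message
 * @param callable(mixed): array<string, mixed> $handler
 * @return array{payload: mixed, headers: array<string, mixed>}
 */
function runHeaderChangingStep(array $message, callable $handler): array
{
    $newHeaders = $handler($message['payload']);

    return [
        'payload' => $message['payload'],                            // unchanged
        'headers' => array_merge($message['headers'], $newHeaders),  // added or overwritten
    ];
}

// Same rules as calculatePricing above, reading values that came from headers.
function calculateDiscount(string $tier, int $points, int $riskScore): float
{
    $discount = 0.0;
    if ($tier === 'PREMIUM' && $points > 1000) {
        $discount = 0.15;
    } elseif ($tier === 'GOLD' && $points > 500) {
        $discount = 0.10;
    }
    if ($riskScore > 80) {
        $discount = max(0.0, $discount - 0.05);
    }

    return $discount;
}

$message = ['payload' => ['orderId' => 'A-1'], 'headers' => ['routingSlip' => ['calculate.pricing']]];

// The enrichment step returns metadata only; the order payload is not touched.
$message = runHeaderChangingStep($message, fn (array $order): array => [
    'customerTier'  => 'PREMIUM',
    'loyaltyPoints' => 1500,
    'riskScore'     => 20,
]);

$discount = calculateDiscount(
    $message['headers']['customerTier'],
    $message['headers']['loyaltyPoints'],
    $message['headers']['riskScore'],
);
```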

Dynamic Routing: Runtime Workflow Construction

Real business processes aren't static. Customer types evolve, regulations change, and new requirements emerge constantly. Orchestrator handles this complexity naturally:

#[Orchestrator(inputChannelName: "process.order")]
public function processOrder(Order $order): array
{
    // Base workflow steps
    $workflow = ["validate.order", "process.payment"];

    // Dynamic routing based on business rules
    if ($order->getCustomer()->isPremium()) {
        $workflow[] = "apply.premium.discount";
        $workflow[] = "priority.inventory.check";

        if ($order->getTotal() > 1000) {
            $workflow[] = "executive.approval";
        }
    }

    // International orders need additional steps
    if ($order->isInternational()) {
        $workflow[] = "customs.documentation";
        $workflow[] = "international.shipping.calculation";
    }

    // Common final steps
    $workflow[] = "reserve.inventory";
    $workflow[] = "send.confirmation";

    // Workflow can be customized dynamically per each Customer separately
    return $workflow;
}

The same orchestrator handles premium and international customers, each with their specific requirements clearly expressed and easy to modify.
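Because the orchestrator only returns an array, the routing decision can be tested as a pure function without executing any step. A hypothetical sketch that reduces the orchestrator above to plain inputs (buildOrderWorkflow() is an illustrative name):

```php
<?php
// Hypothetical stand-in for the orchestrator above, taking plain values
// instead of an Order so the routing can be checked in isolation.
function buildOrderWorkflow(bool $isPremium, float $total, bool $isInternational): array
{
    $workflow = ['validate.order', 'process.payment'];

    if ($isPremium) {
        $workflow[] = 'apply.premium.discount';
        $workflow[] = 'priority.inventory.check';
        if ($total > 1000) {
            $workflow[] = 'executive.approval';
        }
    }

    if ($isInternational) {
        $workflow[] = 'customs.documentation';
        $workflow[] = 'international.shipping.calculation';
    }

    $workflow[] = 'reserve.inventory';
    $workflow[] = 'send.confirmation';

    return $workflow;
}

$standardDomestic = buildOrderWorkflow(false, 50.0, false);
$premiumInternational = buildOrderWorkflow(true, 1500.0, true);
```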

Asynchronous Workflow Steps

Some workflow steps are naturally resource-intensive - image processing, external API calls, email campaigns, data analysis. Orchestrator makes asynchronous processing trivial:

class MediaProcessingOrchestrator
{
    #[Orchestrator(inputChannelName: "process.media.upload")]
    public function processMediaUpload(): array
    {
        return [
            "validate.file.format",   // Fast - runs synchronously
            "scan.for.malware",       // Medium - runs synchronously
            "resize.image",           // Slow - runs asynchronously
            "generate.thumbnails",    // Slow - runs asynchronously
            "extract.metadata",       // Medium - runs asynchronously
            "upload.to.cdn",          // Slow - runs asynchronously
            "update.database",        // Fast - runs synchronously
            "notify.user.completion"  // Fast - runs synchronously
        ];
    }

    // Heavy processing runs asynchronously without blocking
    #[Asynchronous('media_processing')]
    #[InternalHandler(inputChannelName: "resize.image")]
    public function resizeImage(MediaUpload $upload, ImageProcessor $processor): MediaUpload
    {
        $resizedPath = $processor->resize($upload->getPath(), [
            'large' => [1920, 1080],
            'medium' => [1280, 720],
            'small' => [640, 360]
        ]);

        return $upload->withResizedPath($resizedPath);
    }

    #[Asynchronous('media_processing')]
    #[InternalHandler(inputChannelName: "generate.thumbnails")]
    public function generateThumbnails(MediaUpload $upload, ThumbnailGenerator $generator): MediaUpload
    {
        $thumbnails = $generator->generate($upload->getPath(), [
            'preview' => [300, 200],
            'icon' => [64, 64]
        ]);

        return $upload->withThumbnails($thumbnails);
    }
}

The power is in the simplicity: Mix synchronous and asynchronous steps based purely on business needs and performance requirements, not technical limitations or architectural constraints.
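To make the sync/async split concrete, here is a simplified, single-process sketch in PHP 8.1 (the Runner class is hypothetical, not Ecotone's implementation). Steps flagged as asynchronous are not executed inline: the message, with its remaining slip, is parked in an in-memory queue standing in for a broker channel, and a worker resumes it later. As assumed here, each asynchronous step becomes its own queued hop:

```php
<?php
// Hypothetical single-process sketch: asynchronous steps are parked in an
// in-memory queue (standing in for a broker channel) instead of running inline.

final class Runner
{
    /** @var list<array{payload: mixed, slip: list<string>}> */
    public array $queue = [];

    /**
     * @param array<string, callable(mixed): mixed> $handlers
     * @param list<string> $asyncSteps steps that must not block the caller
     */
    public function __construct(
        private array $handlers,
        private array $asyncSteps,
    ) {}

    /** Runs steps inline until the slip is empty or an asynchronous step is reached. */
    public function send(mixed $payload, array $slip, bool $resumedByWorker = false): mixed
    {
        while ($slip !== []) {
            $step = $slip[0];

            if (!$resumedByWorker && in_array($step, $this->asyncSteps, true)) {
                $this->queue[] = ['payload' => $payload, 'slip' => $slip];
                return null; // The caller is not blocked by the slow step.
            }

            $resumedByWorker = false; // Only the first dequeued step skips the check.
            array_shift($slip);
            $payload = ($this->handlers[$step])($payload);
        }

        return $payload;
    }

    /** Consumes one queued message, as a background worker would. */
    public function runWorkerOnce(): mixed
    {
        $message = array_shift($this->queue);
        if ($message === null) {
            return null;
        }

        return $this->send($message['payload'], $message['slip'], true);
    }
}

$append = fn (string $entry): Closure => fn (array $log): array => [...$log, $entry];

$runner = new Runner(
    [
        'validate.file.format'   => $append('validated'),
        'resize.image'           => $append('resized'),
        'generate.thumbnails'    => $append('thumbnails'),
        'notify.user.completion' => $append('notified'),
    ],
    ['resize.image', 'generate.thumbnails'],
);

$immediate = $runner->send([], ['validate.file.format', 'resize.image', 'generate.thumbnails', 'notify.user.completion']);
$queuedAfterSend = count($runner->queue);
$afterFirstWorker = $runner->runWorkerOnce(); // runs resize.image, parks generate.thumbnails
$final = $runner->runWorkerOnce();            // runs generate.thumbnails and notify.user.completion
```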

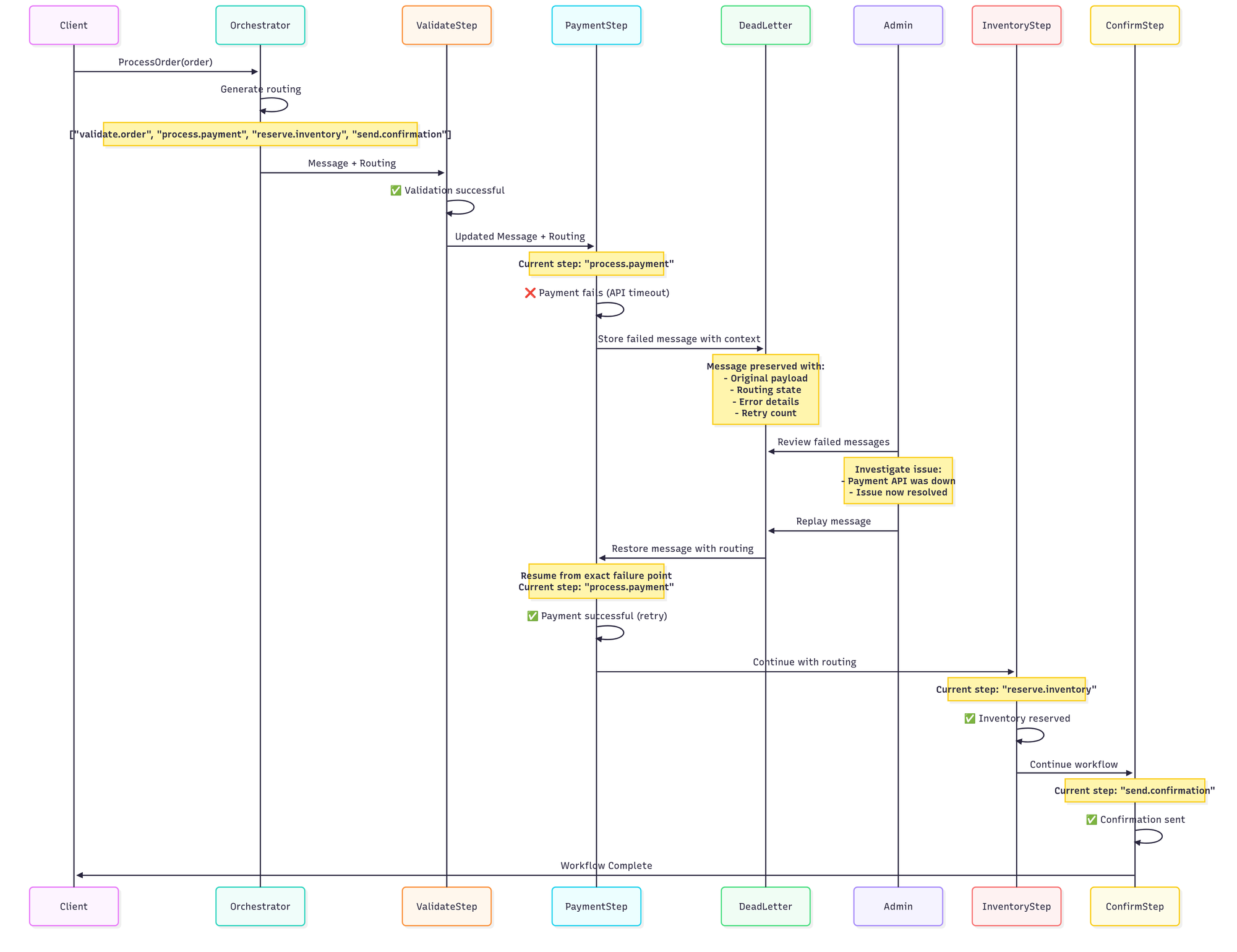

Error Handling and Recoverability

If a message fails, we can use an Error Channel to handle the failure. This way the message is preserved even if we can't handle it at that moment.

For example, we can push the failed message to a Dead Letter to store it for later review, or add a custom retry mechanism to process it again.

In practice, the failed message keeps its payload, its routing state, and the error details, so once the problem is fixed it can be replayed from the step that failed.

Key benefits of this error handling approach:

- No Lost Work: Failed messages are preserved with complete context

- Exact Resume Point: Workflows resume from the exact step that failed, not from the beginning

- Full Context Preservation: Original payload, routing state, and error details are maintained

- Administrative Control: Failed messages can be reviewed, modified, and replayed manually

- Automatic Retry Options: Configure automatic retry with exponential backoff
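The resume-from-failed-step behaviour follows from the routing slip. In this framework-free PHP 8.1 sketch, executeWithDeadLetter() and the DeadLetter class are hypothetical stand-ins for an error channel and dead letter storage, and backoffDelayMs() shows a common exponential backoff formula (initial delay times multiplier to the power of attempt minus one); the default values are illustrative, not Ecotone's:

```php
<?php
// Hypothetical sketch: DeadLetter stands in for dead letter storage and
// executeWithDeadLetter() for a synchronous run with an error channel.

final class DeadLetter
{
    /** @var list<array{payload: mixed, slip: list<string>, error: string}> */
    public array $messages = [];
}

/** @param array<string, callable(mixed): mixed> $handlers */
function executeWithDeadLetter(mixed $payload, array $slip, array $handlers, DeadLetter $deadLetter): mixed
{
    while ($slip !== []) {
        try {
            $payload = $handlers[$slip[0]]($payload);
        } catch (Throwable $e) {
            // Store the payload as it was before the failed step, the remaining
            // slip (starting with the failed step) and the error, then stop.
            $deadLetter->messages[] = ['payload' => $payload, 'slip' => $slip, 'error' => $e->getMessage()];
            return null;
        }
        array_shift($slip);
    }

    return $payload;
}

/** Delay before retry number $attempt (1-based), doubling each time by default. */
function backoffDelayMs(int $attempt, int $initialMs = 1000, int $multiplier = 2): int
{
    return $initialMs * $multiplier ** ($attempt - 1);
}

$notificationUp = false;
$handlers = [
    'process.payment'   => fn (array $o): array => [...$o, 'paid' => true],
    'send.confirmation' => function (array $o) use (&$notificationUp): array {
        if (!$notificationUp) {
            throw new RuntimeException('Notification service unavailable');
        }
        return [...$o, 'confirmed' => true];
    },
];

$deadLetter = new DeadLetter();
$first = executeWithDeadLetter(['id' => 7], ['process.payment', 'send.confirmation'], $handlers, $deadLetter);

// Later, once the service is back, replay the stored message: payment is not repeated.
$notificationUp = true;
$stored = array_shift($deadLetter->messages);
$replayed = executeWithDeadLetter($stored['payload'], $stored['slip'], $handlers, $deadLetter);
```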

Executing Orchestrators: Multiple Entry Points for Maximum Flexibility

Ecotone provides several ways to trigger orchestrated workflows, each designed for different use cases and integration patterns. Let's explore the most common approaches.

Business Interface: Clean API for Predefined Workflows

The most straightforward way to execute orchestrators is through dedicated business interfaces. This approach provides a clean, type-safe API that encapsulates workflow execution:

interface OrderProcessingService
{
    #[BusinessMethod("process.order")]
    public function processOrder(Order $order): ProcessingResult;
}

// The orchestrator that handles the workflow
class OrderOrchestrator
{
    #[Orchestrator(inputChannelName: "process.order")]
    public function processOrder(Order $order): array
    {
        $workflow = [
            "validate.order",
            "process.payment",
            "reserve.inventory"
        ];

        if ($order->getCustomer()->isPremium()) {
            $workflow[] = "apply.premium.benefits";
            $workflow[] = "expedite.shipping";
        } else {
            $workflow[] = "schedule.standard.shipping";
        }

        $workflow[] = "send.confirmation";
        $workflow[] = "audit.transaction";

        return $workflow;
    }
}

// Usage in your application
class OrderController
{
    public function __construct(
        private OrderProcessingService $orderProcessor
    ) {}

    public function processOrder(Request $request): JsonResponse
    {
        $order = Order::fromRequest($request);

        // Clean, simple workflow execution
        $result = $this->orderProcessor->processOrder($order);

        return new JsonResponse([
            'order_id' => $result->getOrderId(),
            'status' => $result->getStatus(),
            'estimated_delivery' => $result->getEstimatedDelivery()
        ]);
    }
}

Benefits of Business Interface approach:

- Clean API: Business-focused method names that express intent clearly

- Documentation: Self-documenting through interface contracts

- Encapsulation: Workflow complexity hidden behind simple method calls

Event-Driven Orchestration: Reactive Workflow Triggers

For event-driven architectures, orchestrators can be triggered automatically when specific events occur. This approach is perfect for workflows that should start in response to domain events:

class UserVerificationEventHandler
{
    // The event is passed on to the orchestrator's input channel
    #[EventHandler(outputChannelName: "verify.user.account")]
    public function verifyUserAccount(UserRegistered $event): UserRegistered
    {
        return $event;
    }
}

class UserVerificationOrchestrator
{
    #[Orchestrator(inputChannelName: "verify.user.account")]
    public function onUserRegistered(UserRegistered $event): array
    {
        $user = $event->getUser();

        // Build verification workflow based on user type
        $workflow = ["send.welcome.email"];

        if ($user->requiresEmailVerification()) {
            $workflow[] = "send.email.verification";
            $workflow[] = "wait.for.email.confirmation";
        }

        if ($user->requiresPhoneVerification()) {
            $workflow[] = "send.sms.verification";
            $workflow[] = "wait.for.sms.confirmation";
        }

        if ($user->isEnterprise()) {
            $workflow[] = "schedule.onboarding.call";
            $workflow[] = "assign.account.manager";
            $workflow[] = "setup.enterprise.features";
        }

        $workflow[] = "activate.user.account";
        $workflow[] = "send.activation.confirmation";
        $workflow[] = "track.registration.metrics";

        return $workflow;
    }
}

Benefits of Event-Driven approach:

- Reactive Architecture: Workflows start automatically when events occur

- Scalability: Events can trigger multiple workflows independently

- Business Alignment: Workflows triggered by actual business events

The same approach can be achieved with Command Handlers and even Query Handlers.

Orchestrator Gateways: Ultimate Flexibility for Dynamic Execution

For maximum business agility, use Orchestrator Gateways to construct and execute workflows at runtime. This approach is perfect when you need to build workflows dynamically based on incoming requests or external criteria:

interface DocumentProcessingGateway
{
    #[OrchestratorGateway]
    public function processDocument(array $steps, Document $document): ProcessingResult;
}

// HTTP Controller that builds workflows based on request parameters
class DocumentController
{
    public function __construct(
        private DocumentProcessingGateway $documentGateway
    ) {}

    // Example API usage:
    // POST /documents/process
    // {
    //     "document": {...},
    //     "requires_approval": true,
    //     "priority": "urgent"
    // }
    public function processDocument(Request $request): JsonResponse
    {
        $document = Document::fromRequest($request);

        // Build workflow dynamically based on request parameters and document properties
        $steps = ["validate.document", "extract.content"];

        // Add approval steps based on document value and type
        if ($request->has('requires_approval') || $document->getValue() > 5000) {
            $steps[] = "legal.review";

            // Executive approval for high-value documents
            if ($document->getValue() > 100000) {
                $steps[] = "executive.approval";
                $steps[] = "board.notification";
            } elseif ($document->getValue() > 10000) {
                $steps[] = "manager.approval";
            }
        }

        // Priority processing for urgent requests
        if ($request->get('priority') === 'urgent') {
            $steps[] = "priority.processing";
            $steps[] = "expedite.review";
        }

        // Final processing step
        $steps[] = "finalize.document";

        // Execute the dynamically built workflow
        $this->documentGateway->processDocument($steps, $document);

        return new JsonResponse([]);
    }

    // Example API allowing clients to define the workflow:
    // POST /documents/custom-workflow
    // {
    //     "document": {...},
    //     "workflow_steps": [
    //         "validate.document",
    //         "legal.review",
    //         "executive.approval",
    //         "apply.security.measures",
    //         "finalize.document"
    //     ]
    // }
    public function processCustomWorkflow(Request $request): JsonResponse
    {
        $document = Document::fromRequest($request);

        // Allow clients to specify custom workflow steps via API
        $customSteps = $request->get('workflow_steps', []);

        // Only steps from this allowlist may be executed
        $allowedSteps = [
            'validate.document', 'extract.content', 'legal.review',
            'manager.approval', 'executive.approval', 'apply.security.measures',
            'audit.access', 'finalize.document', 'notify.stakeholders'
        ];

        // Reject the request if it contains no steps or any step that is not allowed
        if (!is_array($customSteps) || $customSteps === [] || array_diff($customSteps, $allowedSteps) !== []) {
            return new JsonResponse(['error' => 'Invalid workflow steps'], 400);
        }

        // Execute the validated custom workflow in the order the client requested
        $this->documentGateway->processDocument(array_values($customSteps), $document);

        return new JsonResponse([]);
    }
}

Orchestrator Gateways are automatically registered in the Dependency Container and can be auto-wired into any service. Whatever steps you provide to the Gateway will be executed in the given order, enabling you to build any workflow based on incoming requests or business criteria.

Real-world benefits:

- API-Driven Workflows: Clients can influence workflow execution through request parameters

- A/B Testing: Different workflow variations based on user segments or feature flags

- Customer-Specific Processing: Tailored workflows for different customer tiers or contracts
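When clients can submit their own steps, it is safer to reject unknown steps than to drop them silently, because silently dropping a step such as legal.review changes what the workflow means. A hypothetical helper for that validation in plain PHP 8 (validateWorkflowSteps() and InvalidWorkflowException are illustrative names, not Ecotone APIs):

```php
<?php
// Hypothetical validation helper for client-defined workflows.
final class InvalidWorkflowException extends InvalidArgumentException {}

/**
 * Accepts the requested steps only if every one is allowlisted; keeps the client's order.
 *
 * @param list<mixed>  $requested
 * @param list<string> $allowed
 * @return list<string>
 */
function validateWorkflowSteps(array $requested, array $allowed): array
{
    if ($requested === []) {
        throw new InvalidWorkflowException('At least one workflow step is required');
    }

    foreach ($requested as $step) {
        if (!is_string($step) || !in_array($step, $allowed, true)) {
            $name = is_string($step) ? $step : get_debug_type($step);
            throw new InvalidWorkflowException(sprintf('Step "%s" is not allowed', $name));
        }
    }

    return array_values($requested);
}

$allowed = ['validate.document', 'legal.review', 'finalize.document'];
$steps = validateWorkflowSteps(['validate.document', 'finalize.document'], $allowed);

try {
    validateWorkflowSteps(['validate.document', 'delete.everything'], $allowed);
    $rejected = false;
} catch (InvalidWorkflowException $e) {
    $rejected = $e->getMessage() === 'Step "delete.everything" is not allowed';
}
```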

Collecting and Returning Data

For synchronous workflows, Orchestrator can collect data from multiple steps and return a comprehensive result. Ecotone automatically handles message passing between steps and returns the final result from the last workflow step:

interface ReportGenerationService
{
    #[BusinessMethod("generate.customer.report")]
    public function generateCustomerReport(CustomerId $customerId): CustomerReport;
}

class ReportOrchestrator
{
    #[Orchestrator(inputChannelName: "generate.customer.report")]
    public function generateCustomerReport(CustomerId $customerId): array
    {
        return [
            "fetch.customer.data",
            "calculate.customer.metrics",
            "generate.purchase.history",
            "analyze.customer.behavior",
            "compile.final.report"
        ];
    }
}

// Each step enriches the data and passes it to the next step
class ReportGenerationSteps
{
    #[InternalHandler(inputChannelName: "fetch.customer.data")]
    public function fetchCustomerData(CustomerId $customerId, CustomerRepository $repository): ReportData
    {
        $customer = $repository->find($customerId);

        return new ReportData(
            customer: $customer,
            generatedAt: new DateTime(),
            reportType: 'customer_analysis'
        );
    }

    // (...)

    #[InternalHandler(inputChannelName: "compile.final.report")]
    public function compileFinalReport(ReportData $data): CustomerReport
    {
        // This is the final step - its return value becomes the workflow result
        return new CustomerReport(
            customerId: $data->customer->getId(),
            customerName: $data->customer->getName(),
            generatedAt: $data->generatedAt,
            metrics: $data->metrics,
            purchaseHistory: $data->purchaseHistory,
            behaviorInsights: $data->behaviorInsights,
            summary: $this->generateSummary($data)
        );
    }
}

// Usage in controller
class ReportController
{
    public function __construct(
        private ReportGenerationService $reportService
    ) {}

    public function generateReport(string $customerId): JsonResponse
    {
        // The workflow executes all steps and returns the final CustomerReport
        $report = $this->reportService->generateCustomerReport(new CustomerId($customerId));

        return new JsonResponse([
            'customer_id' => $report->getCustomerId(),
            'customer_name' => $report->getCustomerName(),
            'generated_at' => $report->getGeneratedAt()->format('Y-m-d H:i:s'),
            'lifetime_value' => $report->getMetrics()['lifetime_value'],
            'churn_risk' => $report->getMetrics()['churn_risk'],
            'total_orders' => count($report->getPurchaseHistory()),
            'preferred_categories' => $report->getBehaviorInsights()['preferred_categories'],
            'summary' => $report->getSummary()
        ]);
    }
}

Key benefits of data collection workflows:

- Automatic Data Flow: Ecotone handles passing enriched data between workflow steps

- Incremental Building: Data is progressively enriched as it flows through the workflow

- Final Result: The last step's return value becomes the workflow's final result

Real-world use cases:

- Report Generation: Collect data from multiple sources and compile comprehensive reports

- Data Enrichment: Progressively enhance data with information from various services

- Calculation Pipelines: Perform complex calculations that require multiple steps

- Validation Workflows: Validate data through multiple validation layers

- Aggregation Processes: Combine data from different domains into unified results
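Stripped of the framework, a synchronous workflow that returns data behaves like a left fold over its steps. In this hypothetical sketch (runPipeline() is not an Ecotone API), each callable stands in for one of the report steps, and the value returned by the last one becomes the result:

```php
<?php
/**
 * Hypothetical sketch: each step's return value is the next step's input,
 * and the last step's return value is the overall result.
 *
 * @param list<callable(mixed): mixed> $steps
 */
function runPipeline(mixed $input, array $steps): mixed
{
    return array_reduce($steps, fn (mixed $carry, callable $step): mixed => $step($carry), $input);
}

$report = runPipeline('customer-1', [
    // fetch.customer.data: id -> report data
    fn (string $id): array => ['customer' => $id],
    // calculate.customer.metrics: enrich the report data
    fn (array $data): array => [...$data, 'metrics' => ['total_orders' => 3]],
    // compile.final.report: the final step produces the returned value
    fn (array $data): string => sprintf('%s placed %d orders', $data['customer'], $data['metrics']['total_orders']),
]);
```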

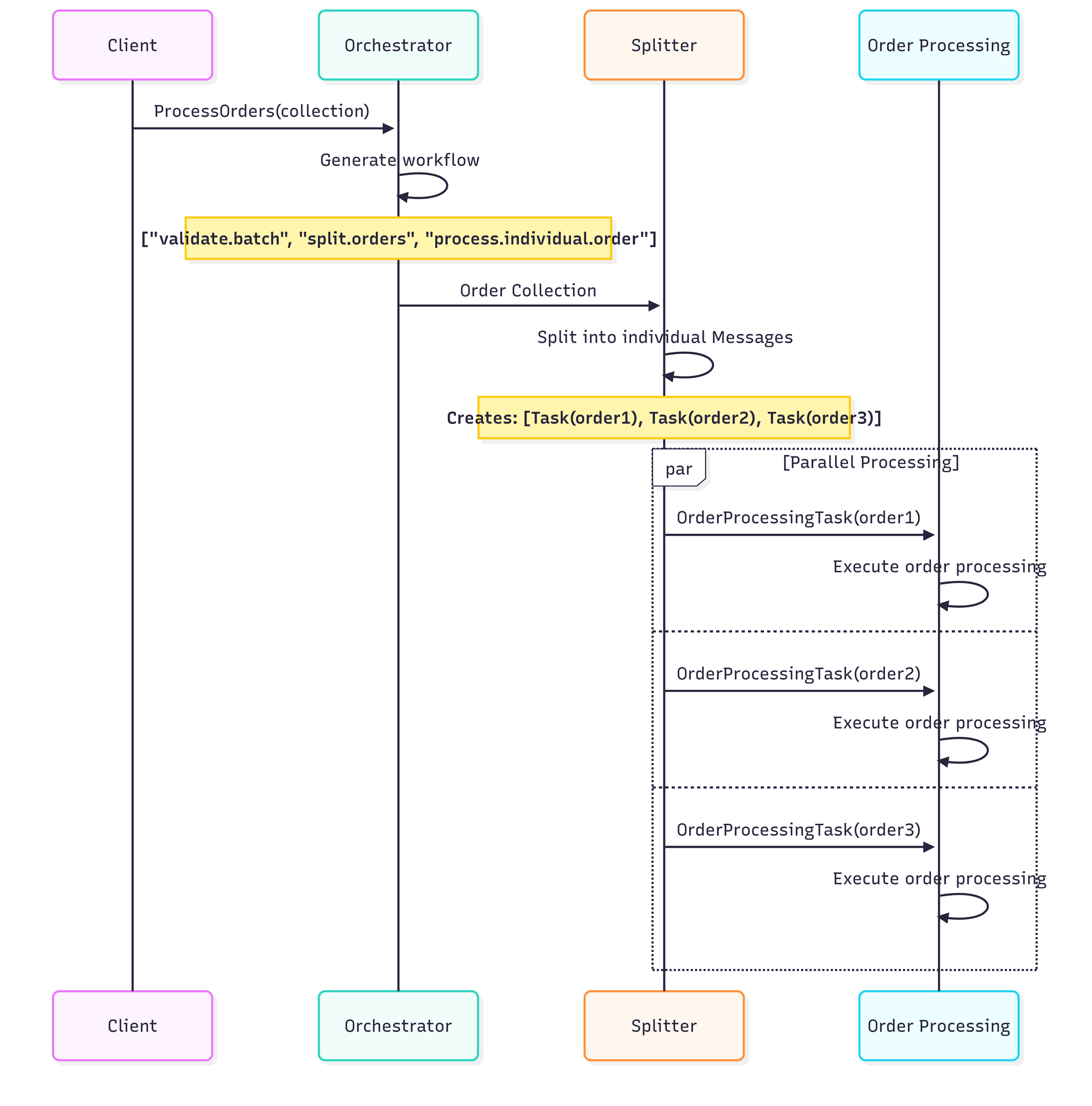

Using Splitters for Batch Parallel Processing

Splitters excel at breaking down collections into individual items that can be processed concurrently. This way we can easily create batches of Messages to process as part of our workflow.

class BatchOrderOrchestrator
{
    #[Orchestrator(inputChannelName: "process.order.batch")]
    public function processBatch(OrderBatch $batch): array
    {
        return [
            "validate.batch",
            "split.orders", // Splitter step - breaks batch into individual orders
            "process.individual.order"
        ];
    }
}

class OrderBatchSplitter
{
    public function __construct(
        private PricingService $pricingService,
        private PaymentService $paymentService
    ) {}

    #[Splitter(inputChannelName: "split.orders")]
    public function splitOrders(OrderBatch $batch): array
    {
        // The splitter returns an array; each item becomes a separate Message
        // that continues with the remaining workflow steps
        return $batch->getOrders();
    }

    // Asynchronous, so each order can be picked up by any consumer of the
    // 'order_processing' channel and processed in parallel
    #[Asynchronous('order_processing')]
    #[InternalHandler(inputChannelName: "process.individual.order")]
    public function processIndividualOrder(Order $order): void
    {
        $pricedOrder = $this->pricingService->calculatePricing($order);
        $this->paymentService->processPayment($pricedOrder);
    }
}

This is a powerful yet easy-to-use concept: by simply returning an array from the splitter, we tell Ecotone to split the work into separate messages that can be processed in parallel.

Key benefits of splitter-based parallel processing:

- True Concurrency: Multiple items are processed simultaneously when several consumers run the async channel

- Resource Optimization: Heavy processing is distributed across the consumers of the async channel

- Scalable Architecture: Add more processor instances without changing workflow logic

- Fault Isolation: Failure in one parallel branch doesn't affect others

- Dynamic Processing: Processing steps determined at runtime based on item properties
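A framework-free sketch of what the splitter step does to the message flow, with hypothetical names (splitIntoMessages() is illustrative): the batch becomes one message per order, each with its own copy of the remaining steps, so a failure in one order is recorded without stopping the others. The loop below runs sequentially; on an asynchronous channel the same messages could be consumed by several workers in parallel.

```php
<?php
/**
 * Hypothetical splitter: one message per item, each with its own slip.
 *
 * @param list<mixed> $items
 * @param list<string> $remainingSlip
 * @return list<array{payload: mixed, slip: list<string>}>
 */
function splitIntoMessages(array $items, array $remainingSlip): array
{
    return array_map(
        fn (mixed $item): array => ['payload' => $item, 'slip' => $remainingSlip],
        $items
    );
}

$handlers = [
    'process.individual.order' => function (array $order): array {
        if ($order['total'] < 0) {
            throw new DomainException("Order {$order['id']} has a negative total");
        }
        return [...$order, 'processed' => true];
    },
];

$messages = splitIntoMessages(
    [['id' => 1, 'total' => 10], ['id' => 2, 'total' => -5], ['id' => 3, 'total' => 20]],
    ['process.individual.order']
);

$processed = [];
$failed = [];

foreach ($messages as $message) {
    try {
        $payload = $message['payload'];
        foreach ($message['slip'] as $step) {
            $payload = $handlers[$step]($payload);
        }
        $processed[] = $payload['id'];
    } catch (DomainException $e) {
        $failed[] = $e->getMessage(); // Isolated: the other messages keep going.
    }
}
```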

Isolated Testing

Testing Orchestrator-based workflows is simple, as Ecotone provides support in the form of Ecotone Lite.

It allows us to test the full workflow logic in isolation, stubbing out any external dependencies where necessary.

public function test_premium_customer_receives_full_benefits(): void
{
    $ecotoneLite = EcotoneLite::bootstrapForTesting([
        OrderOrchestrator::class,
        OrderProcessingSteps::class
    ]);

    $premiumCustomer = new Customer(type: CustomerType::PREMIUM);
    $order = new Order($premiumCustomer, [
        new Item('laptop', Money::USD(1500)),
        new Item('mouse', Money::USD(50))
    ]);

    // The synchronous workflow replies with the Order returned by the last step
    $processedOrder = $ecotoneLite->sendDirectToChannel("process.order", $order);

    // Verify business outcomes on the processed order
    $this->assertTrue($processedOrder->hasDiscount());
    $this->assertTrue($processedOrder->hasFreeShipping());
    $this->assertTrue($processedOrder->hasPrioritySupport());
    $this->assertEquals(ShippingType::EXPEDITED, $processedOrder->getShippingType());
}

public function test_workflow_handles_payment_failure_gracefully(): void
{
    $ecotoneLite = EcotoneLite::bootstrapForTesting([
        OrderOrchestrator::class,
        OrderProcessingSteps::class
    ], [
        PaymentService::class => new FailingPaymentService()
    ]);

    $order = new Order(new Customer(), [new Item('book', Money::USD(25))]);

    try {
        $ecotoneLite->sendDirectToChannel("process.order", $order);
        $this->fail('Expected PaymentException to be thrown');
    } catch (PaymentException) {
        // Expected: the payment step failed
    }

    // The workflow stopped at the failing step, so no later step ran
    $this->assertFalse($order->isPaid());
    $this->assertFalse($order->hasInventoryReserved());
}

Ecotone also makes it possible to test asynchronous steps using In Memory Channels.

Therefore even the most sophisticated workflows can be tested fully and in isolation.

Summary - The Core Concepts

When using Ecotone's Orchestrator, workflow definitions become living documentation:

// This IS your business process - no hidden logic, no scattered implementations
return [
    "validate.order",
    "process.payment",
    "apply.premium.benefits", // Clear business intent
    "expedite.shipping",      // Explicit business rules
    "send.confirmation"
];

The stateless routing pattern eliminates the complexity that has plagued workflow engines.

Since no workflow state is involved, workflow changes can be deployed without downtime or migrations, as part of regular application deployments with no additional procedures.

- Zero Migration Headaches: Change workflows instantly without database migrations

- Effortless Horizontal Scaling: Any server can process any workflow step

- No State Management: No workflow instances, no cleanup, no orphaned processes

Because workflows can be defined dynamically, we can build flexible, adaptive systems that can even be configured per customer:

// Different customers get different workflows automatically
if ($order->getCustomer()->isPremium()) {
    $workflow[] = "apply.premium.benefits";
    $workflow[] = "expedite.shipping";
}

if ($order->isInternational()) {
    $workflow[] = "customs.documentation";
}

On top of that, we can choose the right execution approach for each use case, as Orchestrator provides:

- Business Interfaces: Type-safe APIs for direct workflow execution

- Event-Driven: Reactive workflows triggered by domain events

- Orchestrator Gateways: Runtime workflow construction from HTTP requests

- Data Collection: Synchronous workflows that return enriched results

You don't need to rewrite your entire application overnight to use Ecotone's Orchestrators. You can start with one workflow and expand systematically.

Deploying Ecotone-based changes doesn't require any additional procedures, as Ecotone integrates with Symfony and Laravel, and with other frameworks through Ecotone Lite.

Therefore, trying it out, even in production, takes only a few steps, and those are mostly about the workflow you define rather than the framework itself.